How to Deploy Docker Containers to Production



Inteleto - 30 Jan, 2025

We’re all used to running our applications with Docker containers and docker-compose in the development environment, and it works perfectly: no host OS settings or packages to get wrong. But now we’ve finished the MVP and plan to go to production ASAP, so we’re looking for a way to make our application serve not only us, but the world. This is where we enter the world of container hosting and the complexities of keeping an infrastructure healthy. To help you in this situation, we’ve prepared this guide with our recommendations, based on real-world web projects, and an excellent option for hosting containers.

Image building and serving

The first challenge we encounter is building and serving our image and managing deployments. If you’ve already cloned your GitHub repository onto the production server, made a few changes with vim, run docker-compose up --build and watched your CPU usage jump to 100%, it’s okay, we feel your pain 😄. But you might agree that there should be a better way of doing it, right?

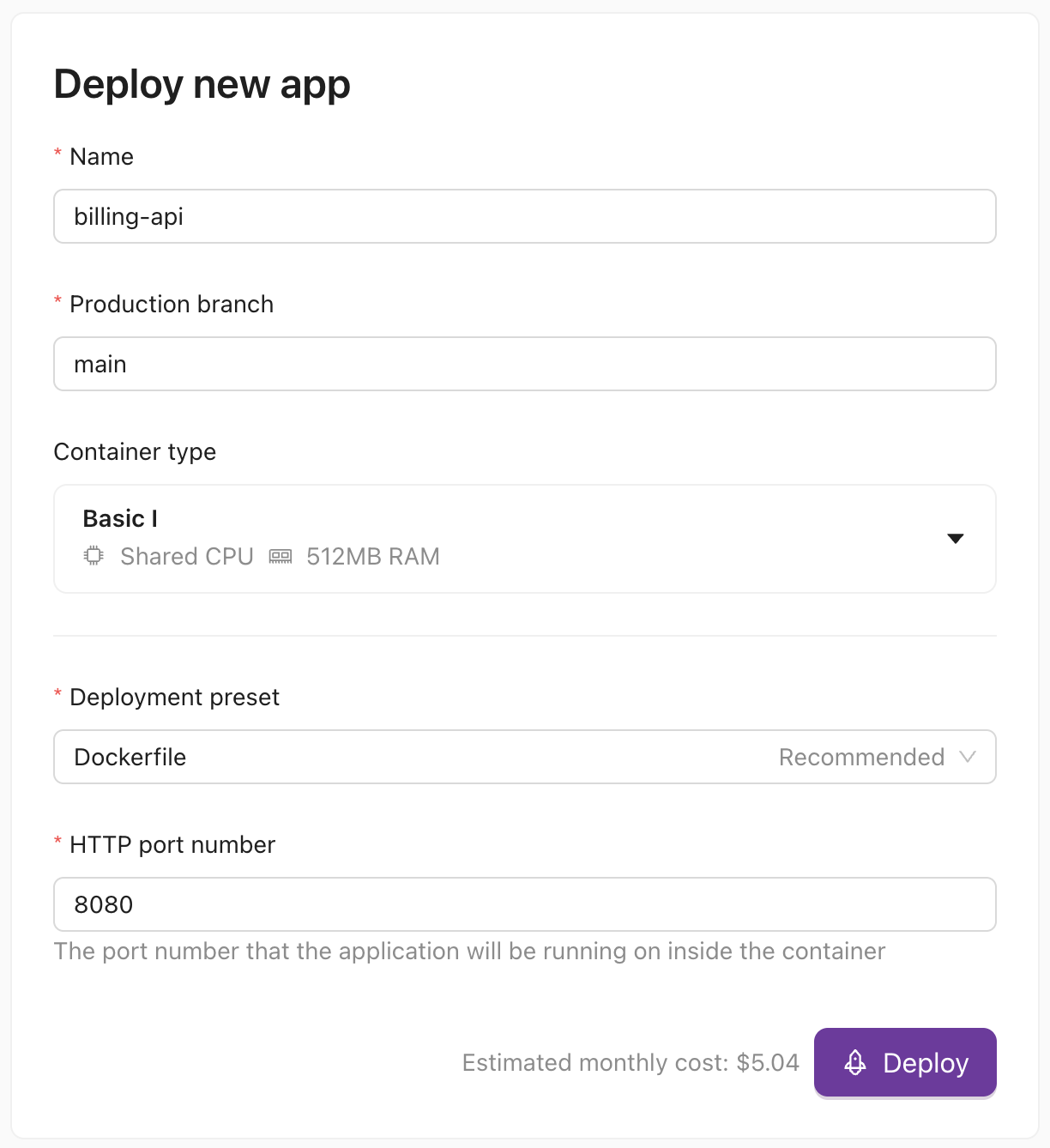

Ideally, you want a Continuous Integration and Continuous Deployment (CI/CD) pipeline that checks your code, runs the automated tests, builds a Docker image, pushes it to a registry (such as Docker Hub), provisions your server, pulls the new image and updates your application to run the latest version. That’s what most people recommend, and that’s almost exactly what we enable you to do.
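The ordering of those stages matters: each one only runs if the previous one succeeded, so a failing test never turns into a deployed image. A minimal Python sketch of that rule, assuming each stage is a hypothetical placeholder function (a real pipeline would call your linter, test runner, docker build, docker push and so on, usually from a CI service rather than a hand-written script):

```python
from typing import Callable, List, Tuple

# A stage is a (name, function) pair; the function returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run the stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, step in stages:
        print(f"-> {name}")
        if not step():
            print(f"!! {name} failed; skipping the remaining stages")
            break
        completed.append(name)
    return completed


def placeholder_stage() -> bool:
    """Hypothetical stand-in for a real step (linting, testing, docker build...)."""
    return True


PIPELINE: List[Stage] = [
    ("check code", placeholder_stage),
    ("run automated tests", placeholder_stage),
    ("build Docker image", placeholder_stage),
    ("push image to registry", placeholder_stage),
    ("provision server", placeholder_stage),
    ("pull image on server", placeholder_stage),
    ("update app to latest version", placeholder_stage),
]

if __name__ == "__main__":
    run_pipeline(PIPELINE)
```

Replacing any stage with one that returns False shows the effect: run_pipeline returns only the stages that finished and never reaches the ones after the failure.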

With Inteleto, we take care of all those boring steps. You just have to link your GitHub repository, and we do all the rest. It may look like magic, but there are a lot of business rules running in the background to make that possible.

Updating and publishing new versions

If you change the color of the button in your UI, all it takes is a git push command, and in about 5 minutes, that newer version is already running in production.

With that, we can keep developing our application, and the first challenge of publishing it to production is solved. Now… how do we access it?

Serving the application publicly

Just having our container running isn’t enough to let users access it. To allow public access, there are a few infrastructure tasks to take care of: provisioning a public IP address, allowing incoming traffic through the firewall, forwarding a port on the physical machine to the running container, and managing DNS records and SSL certificates (to get that essential https:// URL).
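One of those tasks has an application-side counterpart: port forwarding only reaches the container if the app listens on all network interfaces (0.0.0.0), not just on 127.0.0.1 inside the container. A minimal sketch using Python’s standard http.server, assuming the port comes from a PORT environment variable with a hypothetical default of 8080:

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Mapping, Tuple


def listen_address(env: Mapping[str, str] = os.environ) -> Tuple[str, int]:
    """Bind to every interface; 127.0.0.1 would be unreachable from outside the container."""
    return ("0.0.0.0", int(env.get("PORT", "8080")))


class OkHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a plain-text "ok"."""

    def do_GET(self) -> None:
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve() -> None:
    """Start the server and block forever (not called automatically)."""
    HTTPServer(listen_address(), OkHandler).serve_forever()
```

With plain Docker you would then publish the port, for example docker run -p 80:8080, to forward the host’s port 80 to the container’s port 8080.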

Just by linking our GitHub repository on Inteleto, our application is already publicly available, and we even get a domain name with SSL to test it.

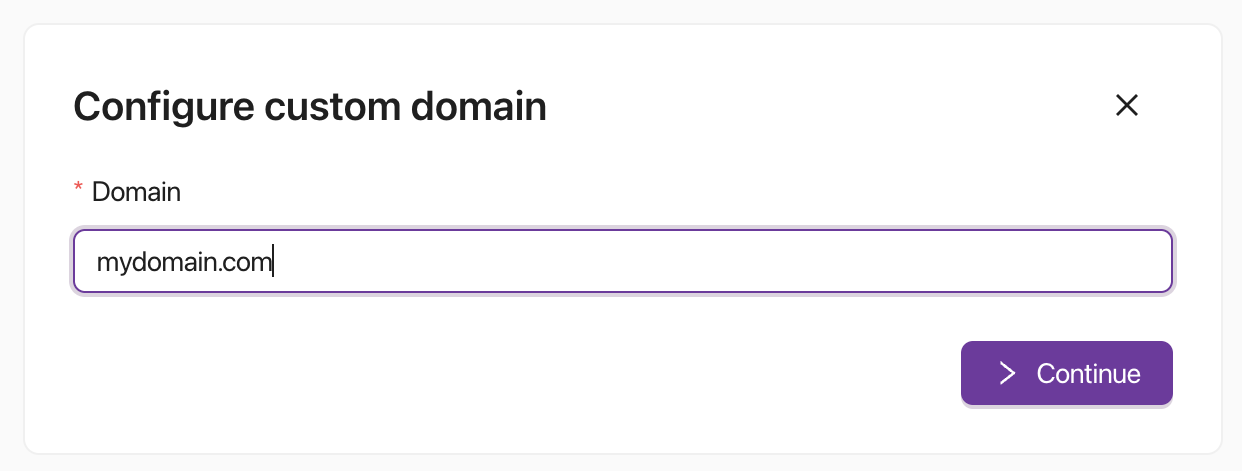

Bringing your own domain

It would be a lot better if users could reach the application through our own domain, right? That’s why Inteleto lets you set up a custom domain, and it provisions all of the necessary resources, including a free-forever SSL certificate.

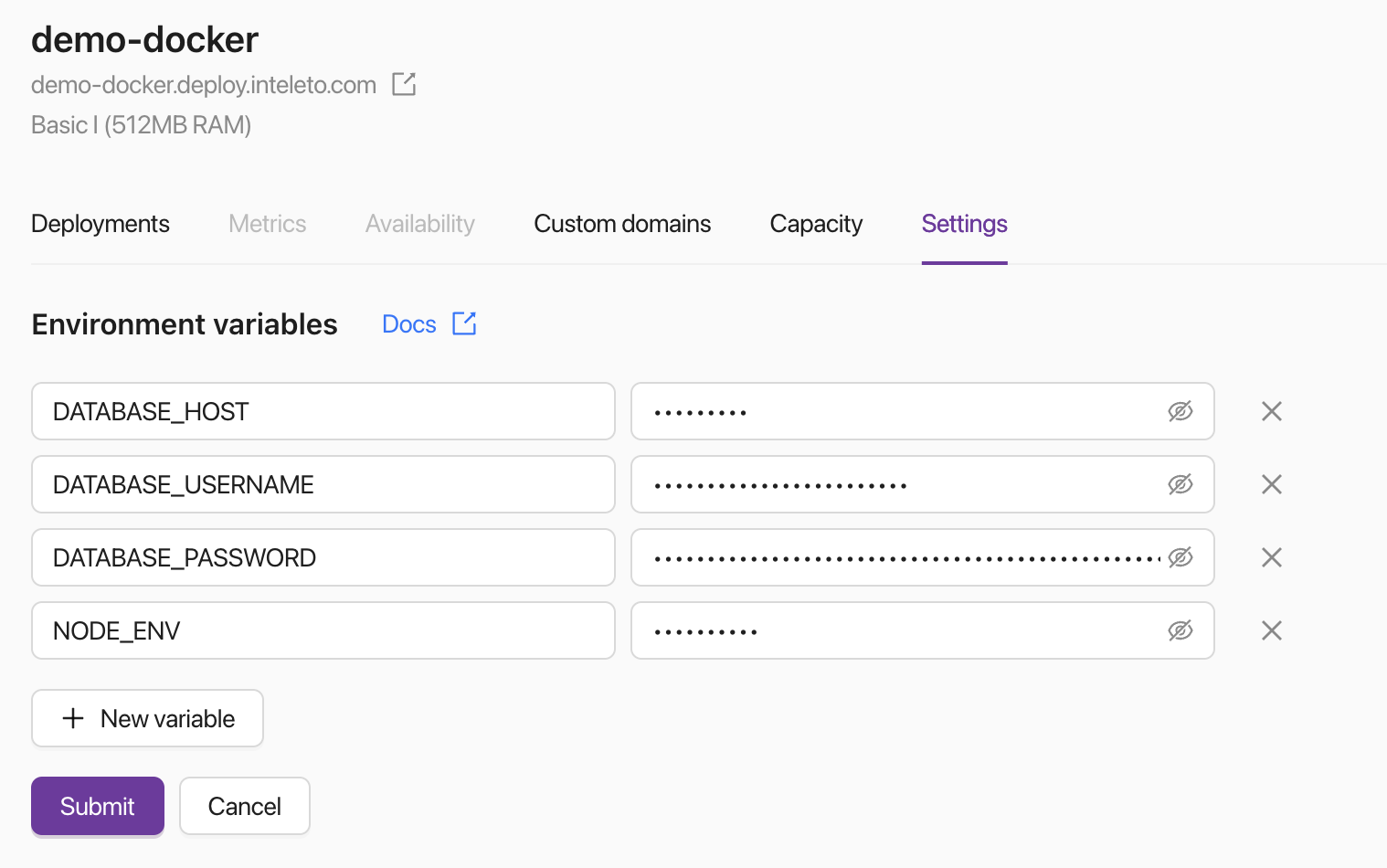

Environment variables

Now it’s time to connect to the database. Since we’re good engineers and never store sensitive information in the code, we use environment variables to inject those credentials into the container at runtime.

What we don’t want are .env files, a database table for secrets, or hardcoded strings in the code. What we want is a secure, private and encrypted way of setting those variables, with the platform safely injecting them into our container.

To do this on Inteleto, we just open the Settings tab and set the necessary variables.
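On the application side, the container only has to read those variables at startup. A minimal Python sketch, assuming hypothetical variable names (DB_HOST, DB_PORT, DB_USER, DB_PASSWORD) and PostgreSQL’s default port 5432 as a fallback; the important part is failing fast with a clear error when a required variable is missing, instead of starting up with an empty password:

```python
import os
from typing import Dict, Mapping, Union


class MissingConfigError(RuntimeError):
    """Raised when a required environment variable is not set."""


def require_env(name: str, env: Mapping[str, str] = os.environ) -> str:
    """Return a required variable, or fail loudly if it is missing or empty."""
    value = env.get(name)
    if not value:
        raise MissingConfigError(f"required environment variable {name!r} is not set")
    return value


def database_settings(env: Mapping[str, str] = os.environ) -> Dict[str, Union[str, int]]:
    """Collect database settings from the environment (variable names are hypothetical)."""
    return {
        "host": require_env("DB_HOST", env),
        "port": int(env.get("DB_PORT", "5432")),  # optional, with a default
        "user": require_env("DB_USER", env),
        "password": require_env("DB_PASSWORD", env),
    }
```

Passing a plain dict instead of os.environ makes the function easy to test without touching the real environment. Avoid printing or logging the returned settings, since they contain the password.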

Insecure setup

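If you’re using a .env file in production to store the environment variables, that’s also a big security problem: the secrets sit in plain text on the server’s disk, and the file can easily end up copied into an image or committed to a repository by mistake. Ideally, you should use environment variables provided by an external service (such as Inteleto).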

Logs, monitoring and alerts

We absolutely want to track the status of a production application closely and automatically, so that we can see the relevant logs, follow the application’s resource usage and receive alerts when something strange happens.

That’s also built into Inteleto. By default, it collects every log from deployed applications and provides charts for CPU and RAM usage, and you can optionally configure availability monitoring and alerting: a service that periodically checks your application and takes action if something isn’t healthy.
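This post doesn’t describe how Inteleto’s availability checks decide that something is unhealthy, so the following only illustrates the general idea: probe an HTTP endpoint periodically and alert after several consecutive failures, so that a single slow response doesn’t wake anyone up. The /health URL, the threshold of 3 and the alert callback are hypothetical:

```python
import time
import urllib.request
from typing import Callable


def http_check(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:  # connection errors, timeouts and HTTP 4xx/5xx (HTTPError)
        return False


class HealthMonitor:
    """Alert once after `threshold` consecutive failed checks, and again on recovery."""

    def __init__(self, check: Callable[[], bool], alert: Callable[[str], None],
                 threshold: int = 3) -> None:
        self.check = check
        self.alert = alert
        self.threshold = threshold
        self.consecutive_failures = 0
        self.alerting = False

    def tick(self) -> None:
        """Run one check and update the alert state."""
        if self.check():
            if self.alerting:
                self.alert("recovered")
            self.consecutive_failures = 0
            self.alerting = False
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.threshold and not self.alerting:
            self.alert(f"unhealthy: {self.consecutive_failures} consecutive failed checks")
            self.alerting = True


def run_forever(monitor: HealthMonitor, interval_seconds: float = 30.0) -> None:
    """Call tick() on a fixed interval; blocks forever."""
    while True:
        monitor.tick()
        time.sleep(interval_seconds)
```

Wiring it up would look like run_forever(HealthMonitor(lambda: http_check("https://your-app.example.com/health"), print)); in practice the alert callback would send an email or chat message instead of printing.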

Scalability

Now that the number of active customers is growing, we start seeing higher CPU and RAM usage in the application, and we receive alerts saying that the application is crashing at peak times. The usual answer would be to buy a bigger server to serve our customers and stop them from churning.

Thankfully, Inteleto allows us to scale our application in two ways, with just a few clicks and zero downtime.

Vertical scaling

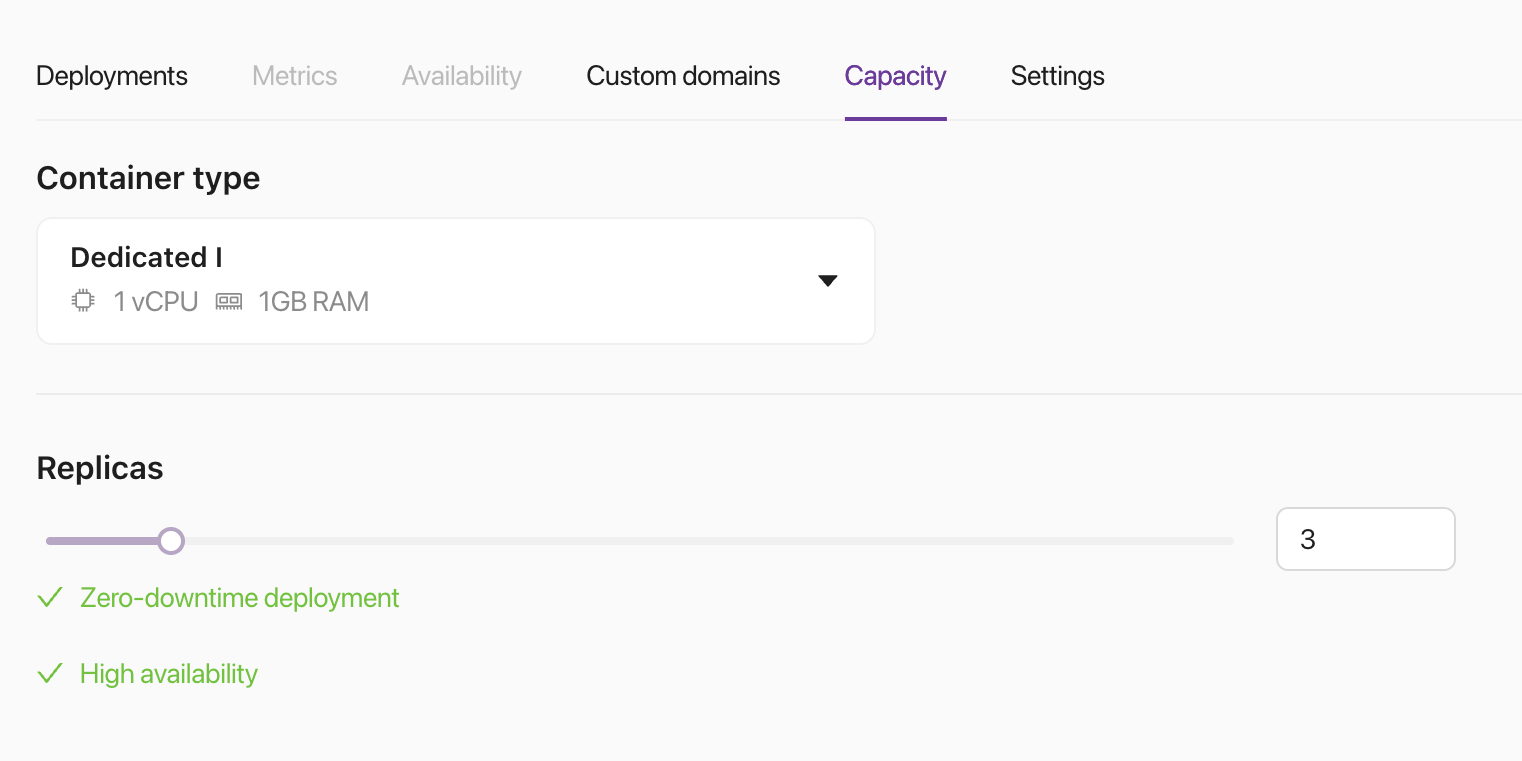

In the Capacity tab, we can change the container type of the application, which allocates more resources to serve user requests. That increase in available CPU and RAM is called vertical scaling, since we’re “stacking” more resources onto the currently running container. When you change the container type, Inteleto automatically provisions the necessary resources for your application.

Horizontal scaling

Also in the Capacity tab, we can change the number of running replicas (horizontal scaling). When we increase the number of replicas, Inteleto balances the load by distributing user requests across the containers. This greatly increases the number of customers we can serve.
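The post doesn’t specify which balancing algorithm Inteleto uses. Round-robin, where each new request goes to the next replica in turn, is one of the simplest common strategies and is enough to show the idea. A minimal Python sketch with hypothetical replica names:

```python
from typing import List, Sequence


class RoundRobinBalancer:
    """Send each new request to the next replica in turn."""

    def __init__(self, replicas: Sequence[str]) -> None:
        self.set_replicas(replicas)

    def set_replicas(self, replicas: Sequence[str]) -> None:
        """Replace the replica list, e.g. after scaling up or down."""
        if not replicas:
            raise ValueError("at least one replica is required")
        self._replicas: List[str] = list(replicas)
        self._counter = 0

    def pick(self) -> str:
        """Return the replica that should handle the next request."""
        replica = self._replicas[self._counter % len(self._replicas)]
        self._counter += 1
        return replica
```

With three replicas, six requests land on app-1, app-2, app-3, app-1, app-2, app-3, so each replica handles a third of the traffic. Production load balancers typically also skip replicas that fail health checks, which this sketch leaves out.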

When you change the number of replicas on Inteleto, the resources are provisioned and your application is replicated. There’s never any downtime while doing so, since at least one replica is always serving requests.

Horizontal scaling is generally the recommended way to scale applications, because vertical scaling has two big problems:

- Changing the CPU and RAM sometimes requires restarting the application.

- Every system has a maximum amount of CPU and RAM available. On Inteleto it is 4 vCPU and 8 GB of RAM, which is a common cap for containers.

Scaling horizontally, on the other hand, has in theory no limit: when you reach the maximum amount of CPU and RAM, you can create another server and distribute the load. Also, many applications (mainly those running on a garbage-collected runtime) benefit less and less from additional resources as they grow. For example, with a garbage-collected runtime and 64 GB of allocated objects, the system could pause the whole application (“stop the world”) for a full second to clean up dead objects. That’s a huge problem for an application serving live requests.
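The per-container cap also gives a quick way to estimate how many replicas a workload needs. A minimal sketch, assuming the load splits evenly across replicas (a stateless app behind a load balancer) and using the 4 vCPU / 8 GB maximum mentioned above; it ignores any headroom for traffic spikes:

```python
import math

# Per-container maximum on Inteleto, as stated above.
MAX_VCPU_PER_CONTAINER = 4.0
MAX_RAM_GB_PER_CONTAINER = 8.0


def min_replicas(total_vcpu: float, total_ram_gb: float,
                 max_vcpu: float = MAX_VCPU_PER_CONTAINER,
                 max_ram_gb: float = MAX_RAM_GB_PER_CONTAINER) -> int:
    """Smallest number of max-size replicas whose combined CPU and RAM cover the demand."""
    needed_for_cpu = math.ceil(total_vcpu / max_vcpu)
    needed_for_ram = math.ceil(total_ram_gb / max_ram_gb)
    # Whichever resource is the bottleneck decides; never go below one replica.
    return max(1, needed_for_cpu, needed_for_ram)
```

For example, a workload needing 10 vCPU and 12 GB of RAM in total gives min_replicas(10, 12) == 3: CPU is the bottleneck (10 / 4 rounds up to 3), while RAM alone would need only 2. No single container could run it through vertical scaling alone.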

NodeJS recommendation

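By default, NodeJS limits how much heap memory a single process can use (the exact default depends on the Node.js version and the memory available, and it can be changed with the --max-old-space-size flag). Combined with the fact that each process executes JavaScript on a single main thread, this means NodeJS applications generally handle heavier workloads better when the load is spread across many processes or replicas than when one process is given more resources.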

Security

Another topic that matters a lot for serious applications is security. The odds that someone manually deploying a Docker container is following security best practices, on the right infrastructure, are low. Even if you correctly configure the firewall, patch the OS regularly and run a good enterprise-grade endpoint protection solution (the successor to traditional antivirus software), you’ll still be limited by the amount of traffic your machine and firewall can handle during a Denial of Service attack.

That’s why it’s better to trust a platform that provides a highly secure environment by default, with a team fully focused on keeping everyone safe.

High availability and zero-downtime deployment

Production applications also need two subtle features that you can’t easily achieve with plain Docker, and that make a big difference: zero-downtime deployments and high availability.

When you deploy a change with plain Docker, the app usually stops, applies the changes and starts again. That interrupts your users’ pending requests, and the application is unavailable for a while. Even after it starts, it still takes time to connect to the database and fill up the cache.

With Inteleto, if you have 2 or more replicas, each deployment creates a new replica running the newer version, waits 60 seconds (by default), adds the new container to the load balancer, stops sending requests to an old container, waits another 60 seconds (by default) and deletes that old container. Inteleto repeats this for every running container until your application is fully deployed.
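The order of those steps is what keeps traffic flowing: the new container joins the load balancer before the old one leaves it. A toy Python model of that sequence, assuming a hypothetical Cluster type and container naming scheme (Inteleto’s internals aren’t described in this post); the sleep function is injectable so the waits can be skipped when experimenting:

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Cluster:
    """Toy model: containers that exist, and the subset receiving traffic."""
    containers: List[str] = field(default_factory=list)
    in_rotation: List[str] = field(default_factory=list)


def rolling_deploy(cluster: Cluster, new_version: str,
                   wait_seconds: float = 60.0,
                   sleep: Callable[[float], None] = time.sleep) -> None:
    """Replace every serving container with one running `new_version`, one at a time."""
    for i, old in enumerate(list(cluster.in_rotation), start=1):
        new = f"{new_version}-{i}"
        cluster.containers.append(new)    # 1. start a replica on the new version
        sleep(wait_seconds)               # 2. give it time to boot, connect and warm up
        cluster.in_rotation.append(new)   # 3. start sending it requests
        cluster.in_rotation.remove(old)   # 4. stop sending requests to the old one
        sleep(wait_seconds)               # 5. let the old one finish in-flight requests
        cluster.containers.remove(old)    # 6. delete the old container
```

Because step 3 always happens before step 4, in_rotation is never empty at any point, which is the zero-downtime property described above.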

For high availability, if you’re running 3 or more replicas, Inteleto always places them as far apart as possible while still keeping low-latency connectivity between them; for example, each container runs in a separate rack. This greatly decreases the chance of your application going offline: even if two instances fail, the third replica can keep serving all of the traffic.
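That placement rule amounts to anti-affinity: never put a second replica in a rack until every rack has one. A simplified Python sketch with hypothetical rack and replica names (the post doesn’t describe Inteleto’s actual scheduler, which also has to account for latency and capacity):

```python
from typing import Dict, List, Set


def spread_across_racks(replicas: List[str], racks: List[str]) -> Dict[str, List[str]]:
    """Assign replicas to racks round-robin, so no rack gets a second
    replica before every rack has one."""
    if not racks:
        raise ValueError("at least one rack is required")
    placement: Dict[str, List[str]] = {rack: [] for rack in racks}
    for i, replica in enumerate(replicas):
        placement[racks[i % len(racks)]].append(replica)
    return placement


def survives(placement: Dict[str, List[str]], failed_racks: Set[str]) -> bool:
    """True if at least one replica is still running outside the failed racks."""
    return any(reps for rack, reps in placement.items() if rack not in failed_racks)
```

With three replicas on three racks, losing any two racks still leaves one replica serving traffic, matching the scenario above; only losing all three takes the application offline.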

Our purpose as a container hosting platform

After hosting many containerized microservice applications for different customers on multiple cloud providers (such as AWS, GCP and Azure), and having to maintain them, we decided to found Inteleto. It is built for developers by people with many hours of hands-on experience running containerized workloads. Our purpose is to offer the most effortless, easy and secure environment for hosting containers, so that just connecting your GitHub repository is enough to have your application running in production.

If all of the conveniences mentioned above make sense to you, sign up for Inteleto and start deploying your application with a few clicks.